Objects as Points

Abstract

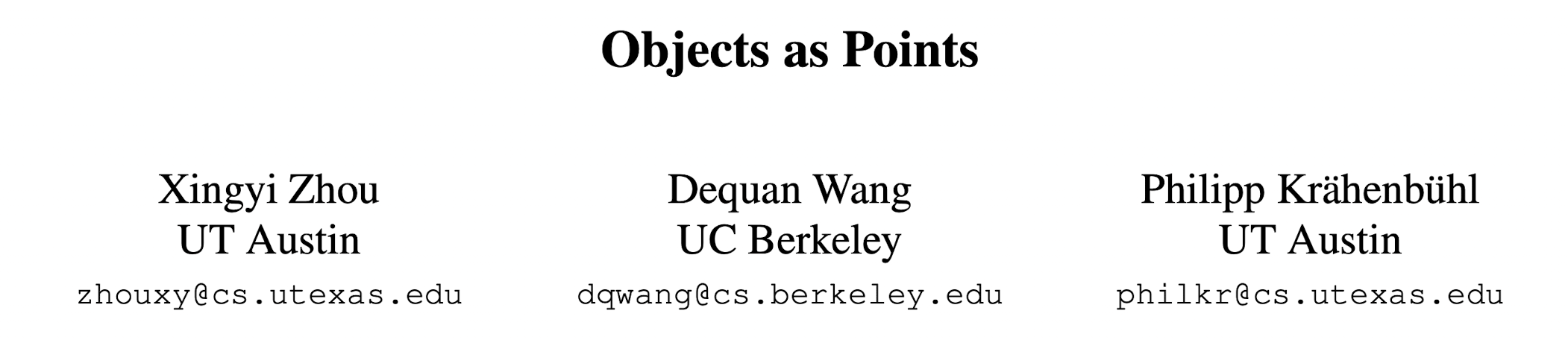

They model an object as a single point -- the center point of its bounding box. The detector uses key-point estimation to find center points and regresses to all other object properties, such as size, 3D location, orientation, and even pose.

Introduction

Current object detectors represent each object through an axis-aligned bounding box that tightly encompasses the object. They then reduce object detection to image classification of an extensive number of potential object bounding boxes. For each bounding box, the classifier determines if the image content is a specific object or background.

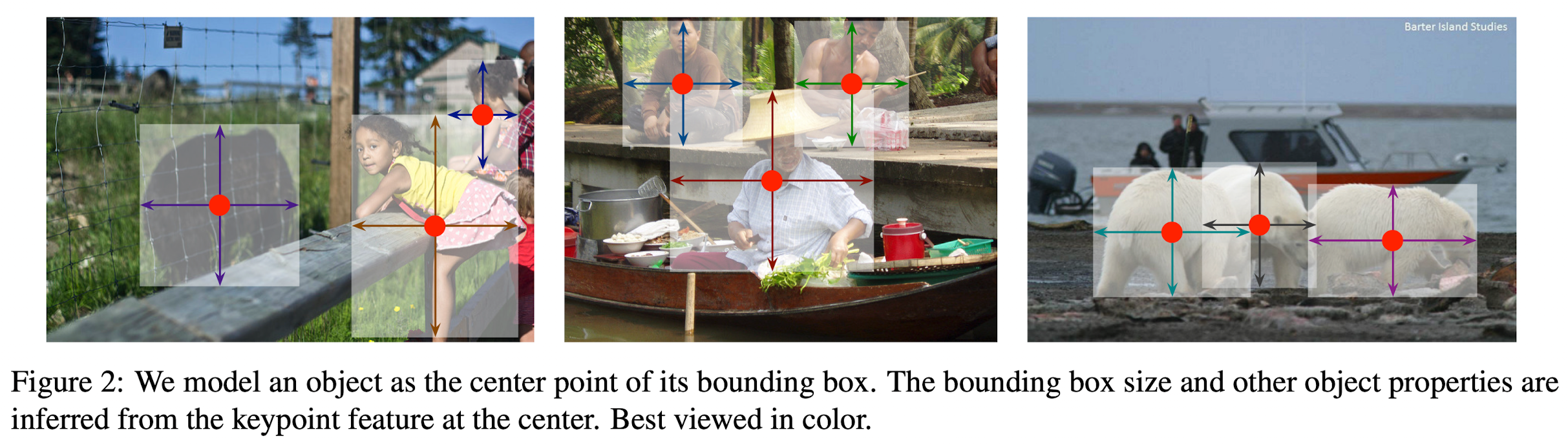

- One-stage detectors slide a complex arrangement of possible bounding boxes, called anchors, over the image and classify them directly without specifying the box content.

- Two-stage detectors recompute image features for each potential box, then classify those features.

Post-processing, namely non-maxima suppression, then removes duplicated detections for the same instance by computing bounding box IoU.
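To make the cost of this step concrete, here is a minimal NumPy sketch of box IoU and greedy NMS. It is a generic illustration, not the code of any particular detector. The function names, the `(x1, y1, x2, y2)` box layout, and the `iou_threshold=0.5` default are assumptions chosen for the example.

```python
import numpy as np

def box_iou(box, boxes):
    """IoU between one box and an (N, 4) array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    # Intersection is zero when the boxes do not overlap.
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Keep the highest-scoring box, drop boxes overlapping it, repeat."""
    order = np.argsort(-scores)  # indices sorted by descending score
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        ious = box_iou(boxes[best], boxes[rest])
        order = rest[ious <= iou_threshold]  # threshold is an assumed value
    return keep
```

The loop is sequential and involves a hard keep-or-drop decision per box, which illustrates why this step sits outside the trained network.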

Warning

This post-processing is hard to differentiate and train, hence most current detectors are not end-to-end trainable.

Sliding-window-based object detectors are, however, a bit wasteful, as they need to enumerate all possible object locations and dimensions.

In this paper, they provide a much simpler and more efficient alternative. They represent objects by a single point at their bounding box center. Other properties, such as object size, dimension, 3D extent, orientation, and pose, are then regressed directly from image features at the center location. Object detection is then a standard key-point estimation problem.

- They simply feed the input image to a fully convolutional network that generates a heatmap. Peaks in this heatmap correspond to object centers.

- Image features at each peak predict the object's bounding box height and width.

Hint

Inference is a single network forward pass, without non-maxima suppression for post-processing.

Related work

Anchor-based Detector

Their approach is closely related to anchor-based one-stage approaches.

Hint

A center point can be seen as a single shape-agnostic anchor.

However, there are a few important differences.

- CenterNet assigns the "anchor" based solely on location, not box overlap. They have no manual thresholds for foreground and background classification.

- They only have one positive "anchor" per object, and hence do not need Non-Maxima Suppression (NMS).

- CenterNet uses a larger output resolution (output stride of 4) compared to traditional object detectors (output stride of 16). This eliminates the need for multiple anchors.

Object detection by key-point estimation

CornerNet detects two bounding box corners as key points, while ExtremeNet detects the top-, left-, bottom-, right-most, and center points of all objects. Both of these methods build on the same robust key point estimation network as CenterNet.

Warning

However, they require a combinatorial grouping stage after key point detection, which significantly slows down each algorithm.

Preliminary

They use several different fully-convolutional encoder-decoder networks to predict key points from an image: a stacked hourglass network, up-convolutional residual networks (ResNet), and deep layer aggregation (DLA).

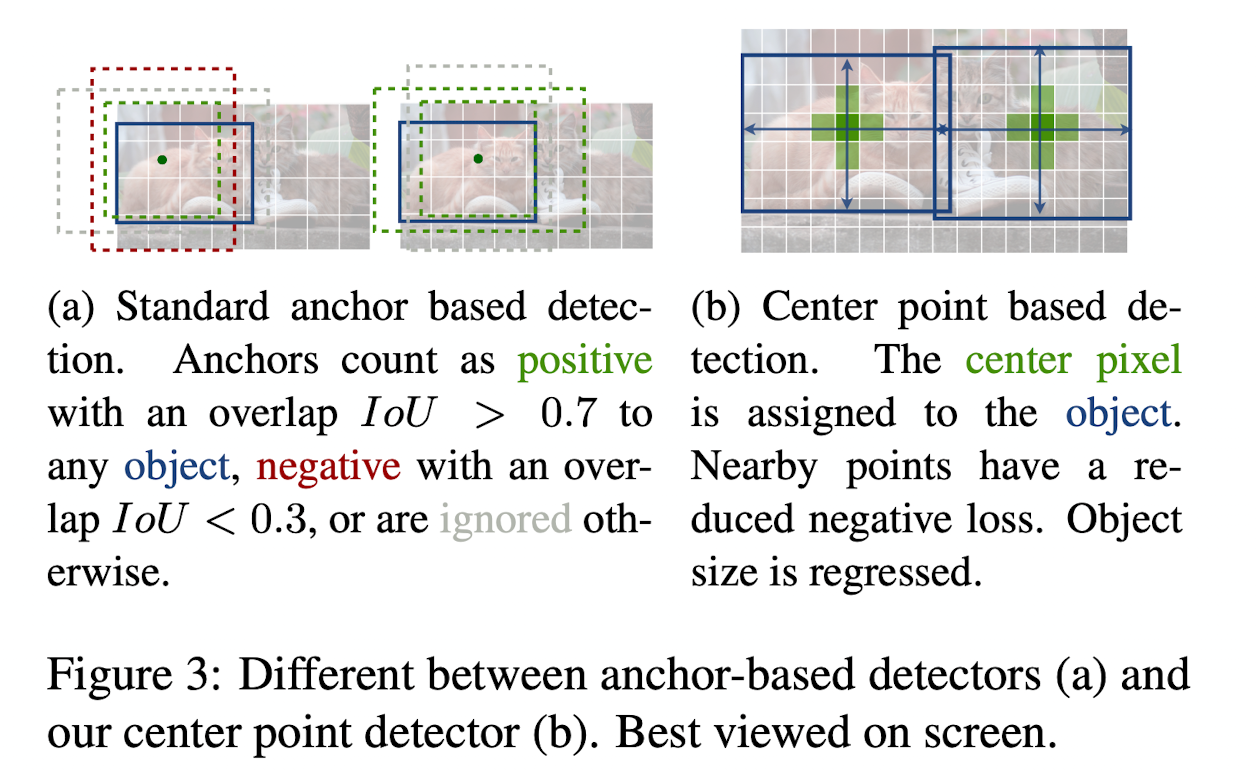

They train the key point prediction network following CornerNet.

- For each ground truth key point, they compute a low-resolution equivalent by dividing its coordinates by the output stride and rounding down.

- They then splat all ground truth key points onto a heatmap using a Gaussian kernel, where \(\sigma_p\) is an object size-adaptive standard deviation.

- If two Gaussian distributions of the same class overlap, they take the element-wise maximum.

- The training objective is a penalty-reduced pixel wise logistic regression with focal loss.

\(N\) is the number of key points in the image, and the normalization by \(N\) is chosen so as to normalize all positive focal loss instances to 1.
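The training target and loss described above can be sketched in NumPy as follows. This is a single-class illustration under stated assumptions, not the authors' implementation. The function names are hypothetical, the per-object `sigmas` are passed in rather than derived from object size, and `alpha=2`, `beta=4` are assumed here as the focal loss exponents.

```python
import numpy as np

def splat_keypoints(shape, centers, sigmas):
    """Build a single-class (H, W) heatmap from low-resolution (x, y) centers."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    heatmap = np.zeros((h, w), dtype=np.float64)
    for (cx, cy), sigma in zip(centers, sigmas):
        # Unnormalized Gaussian: exactly 1.0 at the center pixel.
        g = np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2.0 * sigma ** 2))
        # Element-wise maximum where Gaussians of the same class overlap.
        heatmap = np.maximum(heatmap, g)
    return heatmap

def penalty_reduced_focal_loss(pred, target, alpha=2.0, beta=4.0, eps=1e-12):
    """Pixel-wise focal loss; negatives near a center are down-weighted."""
    pred = np.clip(pred, eps, 1.0 - eps)  # avoid log(0)
    pos = target == 1.0                   # center pixels
    pos_loss = ((1.0 - pred) ** alpha * np.log(pred))[pos].sum()
    # (1 - target)^beta shrinks the penalty close to a ground truth center.
    neg_loss = ((1.0 - target) ** beta * pred ** alpha * np.log(1.0 - pred))[~pos].sum()
    # N is taken as the number of center pixels, assuming distinct centers.
    n = max(int(pos.sum()), 1)
    return -(pos_loss + neg_loss) / n
```

For example, `splat_keypoints((8, 8), [(2, 3), (5, 5)], [1.0, 1.0])` yields a heatmap with value 1.0 at row 3, column 2 and at row 5, column 5, decaying smoothly around each.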

Note

To compensate for the discretization error caused by the output stride, they additionally predict a local offset for each center point.

All classes \(c\) share the same offset prediction.
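A small sketch of where the offset comes from, assuming an output stride of 4 and an L1 penalty between predicted and true offsets. The function names are hypothetical and only illustrate the arithmetic.

```python
import numpy as np

def lowres_center_and_offset(center, stride=4):
    """Map a full-resolution (x, y) center to its heatmap cell and sub-cell offset."""
    center = np.asarray(center, dtype=np.float64)
    scaled = center / stride
    lowres = np.floor(scaled).astype(np.int64)  # integer heatmap location
    offset = scaled - lowres                    # fractional part lost by flooring
    return lowres, offset

def offset_l1_loss(pred_offsets, true_offsets):
    """L1 distance summed over centers and divided by their count; inputs are (N, 2)."""
    pred = np.asarray(pred_offsets, dtype=np.float64)
    true = np.asarray(true_offsets, dtype=np.float64)
    n = max(len(true), 1)
    return np.abs(pred - true).sum() / n
```

A center at `(101, 54)` with stride 4 lands in heatmap cell `(25, 13)` with offset `(0.25, 0.5)`. Adding the offset back and multiplying by the stride recovers the original location.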

Objects as Points

To limit the computational burden, they use a single size predictor for all object categories.

From points to bounding boxes

- At inference time, they first extract the peaks in the heatmap for each category independently.

- They detect all responses whose value is greater than or equal to that of each of their 8-connected neighbors and keep the top 100 peaks.

- They use the key point value at each peak as a measure of its detection confidence.

Note

The peak key point extraction serves as a sufficient NMS alternative and can be implemented efficiently on device using a 3 x 3 max pooling operation.
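The steps above can be sketched in plain NumPy for a single category. This is an illustration under assumptions rather than the reference implementation: the function names are hypothetical, `offsets` and `sizes` are assumed to be `(H, W, 2)` arrays holding `(x, y)` offsets and `(width, height)` per pixel, and boxes are returned in heatmap coordinates.

```python
import numpy as np

def max_pool_3x3(heatmap):
    """3 x 3 max pooling with stride 1 on an (H, W) array, padded with -inf."""
    heatmap = np.asarray(heatmap, dtype=np.float64)
    h, w = heatmap.shape
    padded = np.pad(heatmap, 1, mode="constant", constant_values=-np.inf)
    # Nine shifted views cover each pixel and its 8-connected neighbors.
    windows = [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    return np.max(windows, axis=0)

def extract_peaks(heatmap, top_k=100):
    """Return (xs, ys, scores) of the top_k local maxima, best first."""
    heatmap = np.asarray(heatmap, dtype=np.float64)
    # A pixel is a peak if it is >= every value in its 3 x 3 neighborhood.
    is_peak = heatmap >= max_pool_3x3(heatmap)
    ys, xs = np.nonzero(is_peak)
    scores = heatmap[ys, xs]
    order = np.argsort(-scores)[:top_k]
    return xs[order], ys[order], scores[order]

def decode_boxes(xs, ys, offsets, sizes):
    """Turn peaks into (x1, y1, x2, y2) boxes using per-pixel offset and size."""
    cx = xs + offsets[ys, xs, 0]
    cy = ys + offsets[ys, xs, 1]
    w = sizes[ys, xs, 0]
    h = sizes[ys, xs, 1]
    return np.stack([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2], axis=1)
```

Note that perfectly flat regions also satisfy the "greater than or equal" test, so the top-k cut (or a score threshold) is what keeps low-confidence plateaus out of the final detections.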